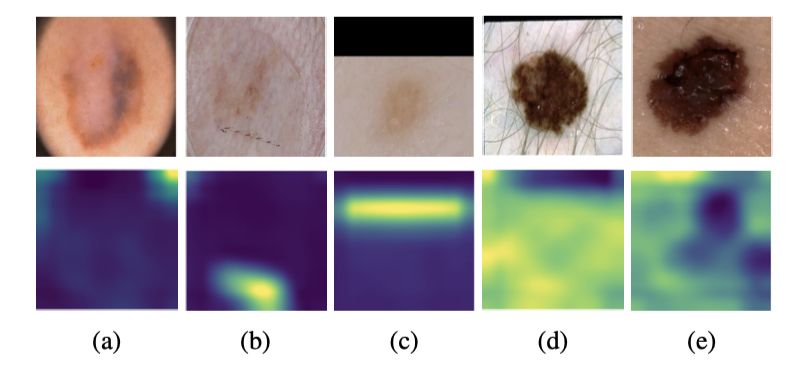

| Deep neural networks have demonstrated promising performance on image recognition tasks. However, they may rely heavily on confounding factors, using irrelevant artifacts or biases within the dataset as cues to improve performance. When a model makes decisions based on these spurious correlations, it becomes untrustworthy and can lead to catastrophic outcomes when deployed in real-world settings. In this paper, we explore and try to solve this problem in the context of skin cancer diagnosis. We introduce a human-in-the-loop framework into the model training process so that users can observe and correct the model's decision logic when confounding behaviors occur. Specifically, our method can automatically discover confounding factors by analyzing the co-occurrence behavior of the samples. It is capable of learning confounding concepts using easily obtained concept exemplars. By mapping the black-box model's feature representation onto an explainable concept space, human users can interpret the concepts and intervene via first-order logic instructions. We systematically evaluate our method on our newly crafted, well-controlled skin lesion dataset and several public skin lesion datasets. Experiments show that our method can effectively detect and remove confounding factors from datasets without any prior knowledge of the category distribution and without requiring fully annotated concept labels. We also show that our method enables the model to focus on clinically relevant concepts, improving the model's performance and trustworthiness during inference. |

Confounding Behaviors on Dermoscopic Images

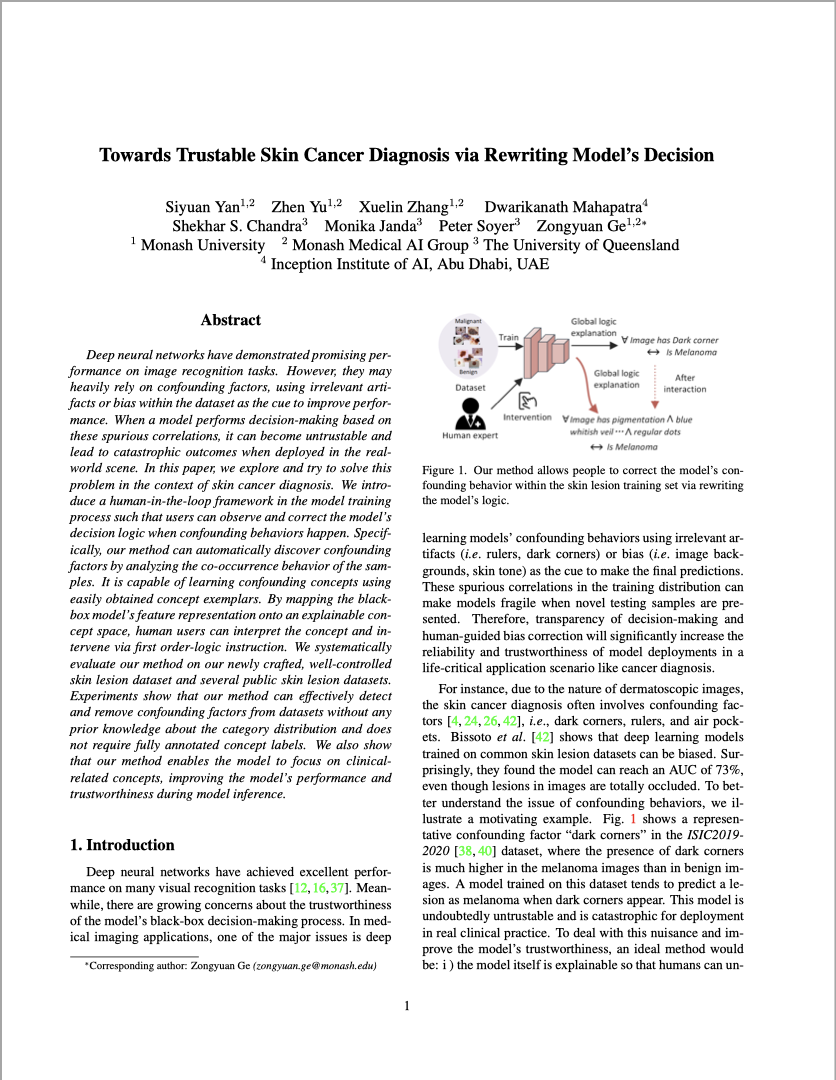

| Confounding concepts observed in the ISIC2019-2020 datasets. The top row shows sample images, and the bottom row shows the corresponding GradCAM heatmaps: (a) dark corners, (b) rulers, (c) dark borders, (e) dense hairs, (f) air pockets. |

A Human-in-the-loop Pipeline to Remove Confounders

| Illustration of our human-in-the-loop pipeline. (a) Applying the spectral relevance analysis algorithm to discover the confounding factors within the dataset. (b) Learning confounding concept vectors and clinical concept vectors. (c) Projecting the model's feature representation onto the concept subspace and then removing the model's confounding behaviors via human interaction. Pig. denotes pigmentation, and Reg. denotes regression structure. |
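Steps (b) and (c) can be illustrated with a minimal sketch. It makes several assumptions that are not taken from the paper: each concept vector is approximated by the normalized difference between the mean feature of concept exemplars and the mean feature of random images (a simple stand-in for a learned concept vector), the feature values are toy numbers, and the "removal" step is a plain orthogonal projection rather than the interactive, logic-based correction the pipeline describes. All function names are hypothetical.

```python
import math

def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def concept_vector(concept_feats, random_feats):
    """Assumed stand-in: direction from random features to concept exemplars."""
    mu_c = mean_vector(concept_feats)
    mu_r = mean_vector(random_feats)
    return unit([c - r for c, r in zip(mu_c, mu_r)])

def concept_scores(feature, concepts):
    """Project one feature vector onto every concept direction."""
    return {name: dot(feature, vec) for name, vec in concepts.items()}

def remove_concept(feature, vec):
    """Simplified removal: subtract the component along one concept direction."""
    s = dot(feature, vec)
    return [f - s * v for f, v in zip(feature, vec)]

# Toy 3-dimensional features (illustrative values only).
random_feats = [[0.0, 0.1, 0.0], [0.2, 0.0, 0.2]]
dark_corner_feats = [[2.0, 0.1, 0.0], [1.8, 0.0, 0.2]]
pigmentation_feats = [[0.1, 2.0, 0.1], [0.1, 1.6, 0.1]]

concepts = {
    "dark_corner": concept_vector(dark_corner_feats, random_feats),
    "pigmentation": concept_vector(pigmentation_feats, random_feats),
}

feature = [1.5, 0.7, 0.3]
before = concept_scores(feature, concepts)
cleaned = remove_concept(feature, concepts["dark_corner"])
after = concept_scores(cleaned, concepts)
print(before)
print(after)
```

In this toy setup the two concept directions are orthogonal, so removing the dark-corner component leaves the pigmentation score unchanged; with real, correlated concept vectors that would not hold in general.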

Discovered Confounding Factors

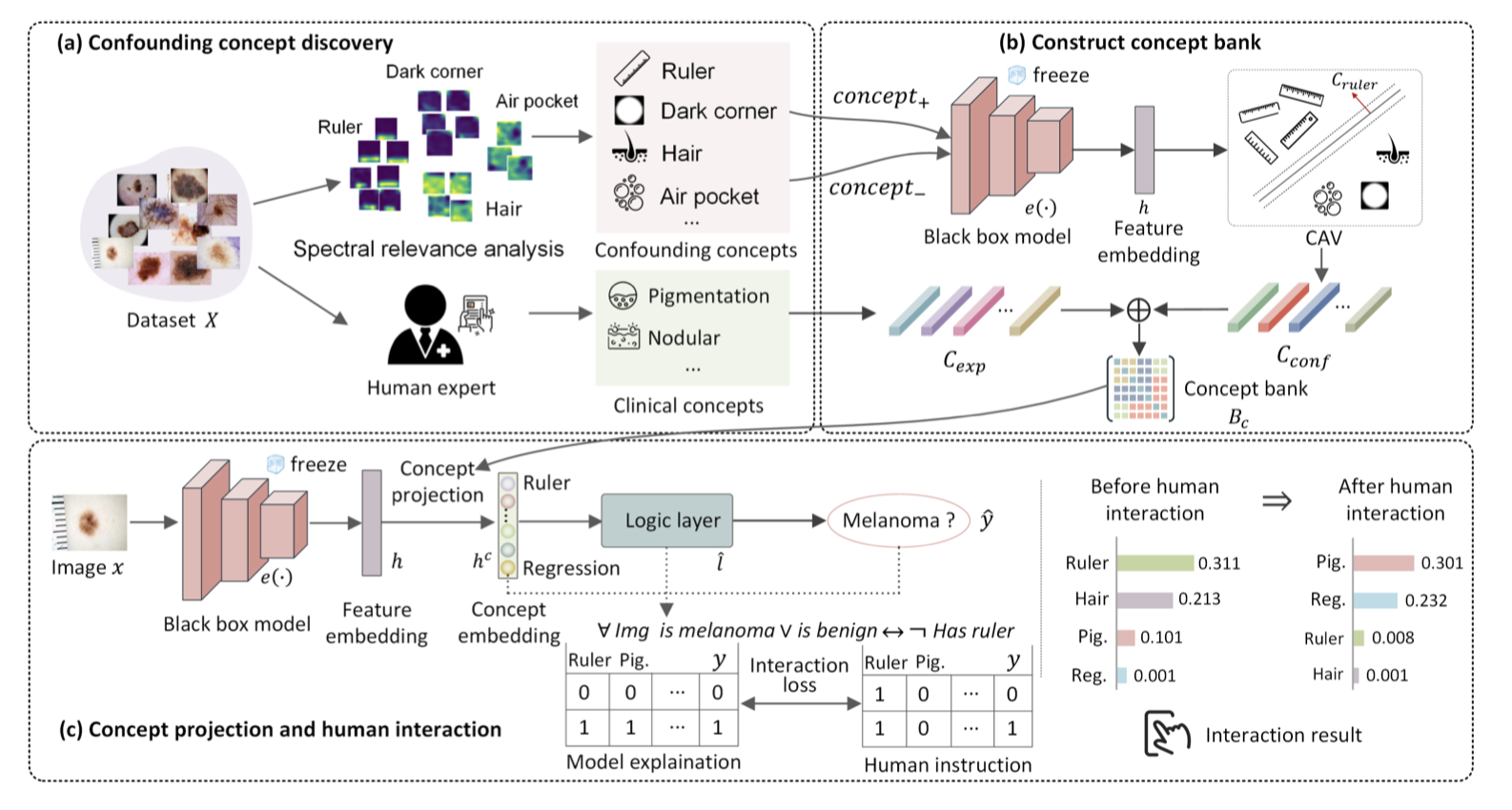

| Global analysis of the models' behavior within datasets using GCCD. Left: t-SNE of the spectral clustering of GradCAMs from a ResNet50 on ISIC2019 and ISIC2020. Right: t-SNE of the spectral clustering of GradCAMs from a VGG16 on the SynthDerm dataset. |
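The idea of grouping samples by where the model looks can be sketched as a two-way spectral partition of flattened attention heatmaps. This is only an illustration under stated assumptions: the heatmaps are hand-made 2x2 toy arrays rather than real GradCAM outputs, the affinity is a Gaussian kernel with an arbitrary `sigma`, only two clusters are found, and the second eigenvector is obtained by a simple power iteration. It is not the GCCD procedure itself, whose details are given in the paper.

```python
import math

def gaussian_affinity(maps, sigma=0.5):
    """Pairwise Gaussian similarity between flattened heatmaps (zero diagonal)."""
    n = len(maps)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(maps[i], maps[j]))
                W[i][j] = math.exp(-d2 / (2 * sigma ** 2))
    return W

def spectral_bipartition(W, iters=200):
    """Split samples in two using the second eigenvector of D^-1/2 W D^-1/2."""
    n = len(W)
    deg = [sum(row) for row in W]
    S = [[W[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
         for i in range(n)]
    # The leading eigenvector of S is proportional to sqrt(deg); deflate it.
    top = [math.sqrt(d) for d in deg]
    norm = math.sqrt(sum(t * t for t in top))
    top = [t / norm for t in top]
    v = [float((-1) ** i * (i + 1)) for i in range(n)]  # deterministic start
    for _ in range(iters):
        proj = sum(a * b for a, b in zip(v, top))
        v = [a - proj * b for a, b in zip(v, top)]
        # Multiply by (S + I) so every eigenvalue is non-negative.
        v = [v[i] + sum(S[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(a * a for a in v))
        v = [a / norm for a in v]
    return [1 if x > 0 else 0 for x in v]

# Toy flattened 2x2 heatmaps: the first three attend to the top-left corner
# (for example a dark corner), the last three to the bottom-right region.
heatmaps = [
    [0.9, 0.1, 0.0, 0.0],
    [1.0, 0.0, 0.1, 0.0],
    [0.8, 0.1, 0.1, 0.0],
    [0.0, 0.0, 0.1, 0.9],
    [0.0, 0.1, 0.0, 1.0],
    [0.1, 0.0, 0.0, 0.8],
]

labels = spectral_bipartition(gaussian_affinity(heatmaps))
print(labels)
```

A cluster whose heatmaps concentrate on a non-lesion region is a candidate confounder for a human to inspect; in practice a library implementation with more clusters and a t-SNE view of the embedding would replace this hand-rolled version.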

Global Explanation

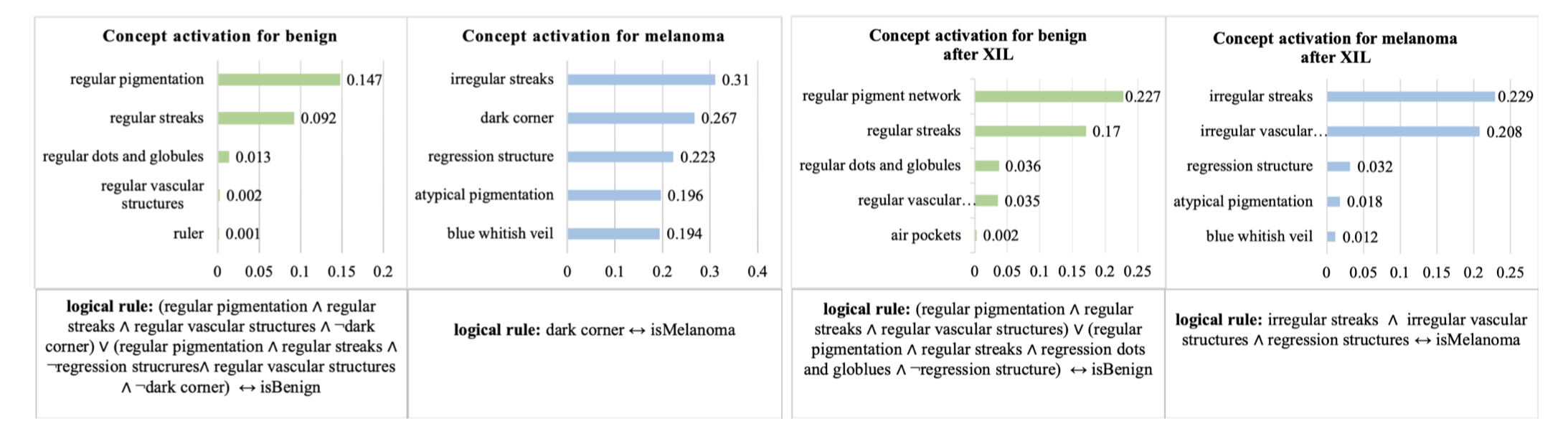

| Global explanation of the model's behavior on the melanoma (dark corners) dataset of ConfDerm. In the left two figures, both the concept activations and the logical rule show that the model is confounded by the dark-corner concept when predicting melanoma. In the right two figures, after the interaction, the model no longer predicts melanoma based on dark corners and instead relies on meaningful clinical concepts. |

Quantitative Result

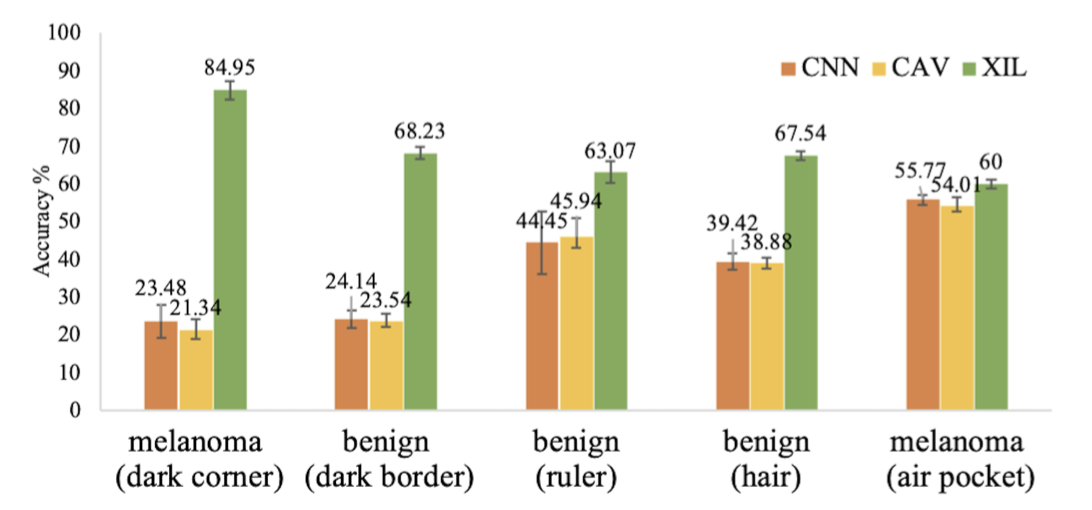

| Performance improvement on the confounded class after removing different confounded classes using XIL, across the five confounded datasets in ConfDerm. |

ConfDerm Dataset

| We plan to release the dataset soon. Please refer to the paper for details of the dataset. |